From Animal Speech to AI-Powered Robots: The Next Leap in Natural Language Understanding

We’re witnessing something remarkable in tech right now. The boundaries between human and machine communication are blurring faster than anyone predicted. What started as simple chatbots has evolved into AI systems that help decode animal vocalizations, power conversational travel booking, and let robots act on natural language commands.

It’s not just another incremental upgrade. We’re talking about a fundamental shift that’s reshaping everything from how we interact with our devices to how we understand communication itself.

When Sound Becomes Physical

Here’s something that’ll make you think twice about the power of natural signals. Scientists recently found that a baby’s cry can make adults physically hotter. Using facial temperature measurements, researchers found that when adults hear cries with complex, chaotic acoustic patterns (what scientists call non-linear phenomena), their faces warm measurably.

This wasn’t just a psychological response. It was consistent across genders and especially strong when the cries conveyed pain or urgency. What makes this relevant to tech? It shows us that natural sound signals, refined by millions of years of evolution, are designed to cut through noise and demand immediate attention. That’s exactly what today’s AI systems are learning to emulate.

Cracking the Code of Animal Communication

If you think decoding human language is complex, try figuring out what whales are saying to each other. Researchers are now using AI to tackle exactly that challenge. According to Nature, some animals, including primates, whales, and certain birds, produce vocalizations whose structure looks surprisingly similar to that of human language.

These aren’t random sounds. We’re seeing compositional structures, recognizable patterns, and even signs of productivity where animals construct new calls from familiar sounds. The missing piece? Animals still can’t communicate about things distant in time or space the way humans do.

That’s where advanced AI tools step in. Natural language processing models are now scouring terabytes of animal calls, hunting for meanings and communication patterns that human ears simply can’t catch. We’re not chatting with dolphins yet, but the trajectory is clear.

LLMs Transform Business Communication

While researchers decode animal languages, businesses are seeing immediate returns from natural language AI. Take the insurance industry, where precision matters and miscommunication can cost millions.

Large language models are revolutionizing actuarial work, handling tasks that traditionally required specialized expertise. These aren’t just fancy chatbots. We’re talking about AI that can interpret customer queries in plain language, assess risk factors, clarify complex policies, and ensure regulatory compliance.

For developers and crypto traders who’ve dealt with DeFi protocols, this might sound familiar. Just as smart contracts simplified blockchain interactions, LLMs are removing friction from traditional business processes. The difference? Instead of learning Solidity, customers can just ask questions in everyday language.

Travel Gets Conversational

Here’s where things get really interesting for consumers. Travel booking is about to become as simple as telling a friend about your vacation plans. Kayak is launching an AI-powered search tool that lets you plan trips using everyday language.

Forget rigid search forms with dropdown menus. You’ll be able to type or say something like “I want a beach vacation in Europe next month, somewhere warm but not too expensive.” The AI interprets your conversation, navigates through millions of flights, hotels, and experiences, then serves up results that actually make sense.
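To make that concrete, here’s a minimal Python sketch of the first step: turning a free-text request into structured search filters. It’s a toy keyword matcher, not a description of how Kayak’s tool works; a real system would presumably lean on a language model for this. The names `TripQuery`, `parse_trip_request`, and the keyword tables are all hypothetical, chosen only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class TripQuery:
    """Structured filters that a search backend could consume (hypothetical)."""
    trip_type: Optional[str] = None
    region: Optional[str] = None
    when: Optional[str] = None
    budget: Optional[str] = None


# Hypothetical keyword tables; a real system would not hard-code these.
TRIP_TYPES = ("beach", "ski", "city")
REGIONS = ("europe", "asia", "caribbean")
BUDGET_HINTS = {"not too expensive": "moderate", "cheap": "low", "luxury": "high"}


def parse_trip_request(text: str) -> TripQuery:
    """Extract whatever filters can be recognized from a free-text request."""
    lowered = text.lower()
    query = TripQuery()

    # Match whole words only, so "city" does not fire inside "capacity".
    query.trip_type = next(
        (t for t in TRIP_TYPES if re.search(rf"\b{t}\b", lowered)), None
    )
    query.region = next(
        (r for r in REGIONS if re.search(rf"\b{r}\b", lowered)), None
    )

    # Only relative dates of the form "next week/month/year" are recognized.
    match = re.search(r"\bnext (week|month|year)\b", lowered)
    if match:
        query.when = f"next {match.group(1)}"

    # The first matching budget phrase wins.
    for phrase, level in BUDGET_HINTS.items():
        if phrase in lowered:
            query.budget = level
            break

    return query
```

Fed the example request above, this sketch picks out a beach trip in Europe, next month, on a moderate budget. Anything it doesn’t recognize is simply left unset, which is exactly the kind of gap a conversational system would have to fill by asking a follow-up question.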

The real challenge isn’t the tech sophistication. It’s trust. Will people feel comfortable letting AI make complex travel decisions? Early adopters will likely love the hands-free, personalized experience. But mainstream adoption depends on proving these systems can handle the nuances of real-world travel planning.

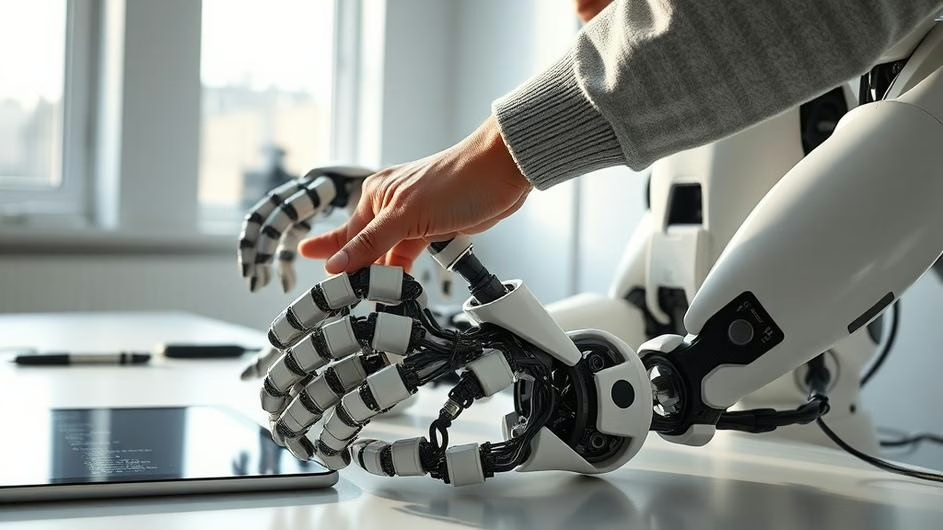

Robots Learn to Speak Our Language

Now we’re getting to the really exciting part. September 2025 brought us something significant: the beta launch of OpenMind’s OM1 operating system for robots. This isn’t just another robotics platform. It’s an open-source, robot-agnostic OS built specifically around language models, and it’s pitched as the first of its kind.

What does that mean practically? Robots running OM1 can perceive, reason, and act with unprecedented flexibility. Whether it’s a warehouse bot, a humanoid assistant, or something completely new, they can all understand and respond to natural language commands.

The open-source aspect is crucial here. Just as blockchain’s open nature drove innovation, OM1’s accessibility is breaking down proprietary barriers. Developers worldwide can now collaborate, create interoperable systems, and push robotics forward at lightning speed.

What This Means for Tech’s Future

We’re looking at a fundamental shift in human-machine interaction. From recognizing emotional distress in a baby’s cry to having open-ended conversations with travel apps, from understanding animal communication to deploying robots that get our natural speech patterns, language is becoming the universal interface.

For developers, this represents massive opportunity. Natural language understanding isn’t sci-fi anymore. It’s the connective tissue linking software, hardware, and even biological systems. The companies and developers who master these technologies first will have serious advantages in user experience, automation, and robotics.

Think about the implications for Web3 and crypto. Complex DeFi interactions could become as simple as saying “stake my ETH in the highest-yield protocol with acceptable risk.” NFT marketplaces could respond to “show me affordable art from emerging digital artists.” The barriers between technical complexity and user adoption start disappearing.
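Here’s a rough Python sketch of what could sit behind a request like the staking one, once the language layer has turned “highest-yield” and “acceptable risk” into a goal and a constraint. Everything in it is an assumption made for illustration: the `Protocol` type, the 1-to-5 risk scale, and the sample protocols and yields are invented, not real market data or any real platform’s API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Protocol:
    """A staking option (hypothetical type, for illustration only)."""
    name: str
    apy_percent: float
    risk_score: int  # made-up scale: 1 (lowest risk) to 5 (highest risk)


def pick_protocol(protocols: Sequence[Protocol], max_risk: int) -> Optional[Protocol]:
    """Return the highest-yield protocol whose risk is within the user's limit."""
    eligible = [p for p in protocols if p.risk_score <= max_risk]
    if not eligible:
        # Nothing satisfies the constraint; the caller should ask the user.
        return None
    return max(eligible, key=lambda p: p.apy_percent)


# Invented sample data, not real protocols or real yields.
SAMPLE_PROTOCOLS = [
    Protocol("ProtocolA", apy_percent=3.1, risk_score=1),
    Protocol("ProtocolB", apy_percent=4.2, risk_score=2),
    Protocol("ProtocolC", apy_percent=9.5, risk_score=5),
]
```

With a risk limit of 2, the sketch picks ProtocolB and skips the higher-yield but riskier ProtocolC. The selection logic is the easy part. The hard part is deciding what “acceptable risk” means for a given user, which is where the conversation has to do real work.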

The Conversational Revolution

What we’re seeing isn’t just incremental improvement in chatbots. It’s the emergence of language as the primary interface between humans and technology. The same AI breakthroughs helping us understand whale songs are powering insurance automation, travel planning, and robot operation.

For investors and technologists, the message is clear. Companies building natural language capabilities into their core products aren’t just following a trend. They’re positioning themselves for a future where conversation, not configuration, becomes the norm.

As LLMs become more sophisticated and platforms like OM1 democratize access to language-powered robotics, we might soon find ourselves in a world where talking to our devices feels as natural as talking to each other. The question isn’t whether this future will arrive, but how quickly we can adapt to it.

The revolution in natural language understanding is happening now. The companies, developers, and investors who recognize its potential today will be the ones shaping tomorrow’s conversational world.

Sources

- The Sound of a Baby Crying Can Make Adults Physically Hotter, ScienceAlert, September 14, 2025

- LLMs hold promise for the actuarial field, InsuranceNewsNet, September 17, 2025

- Kayak Plans to Launch Natural Language Search in Two Weeks, CEO Says, Skift, September 17, 2025

- AI is helping to decode animals’ speech. Will it also let us talk with them?, Nature, September 17, 2025

- OpenMind launches OM1 Beta open-source, robot-agnostic operating system, The Robot Report, September 18, 2025