CES 2026: When AI Left the Cloud and Entered the Real World

Walking through the Las Vegas Convention Center this year, you couldn’t help but feel something had shifted. This wasn’t just another trade show with flashy demos and empty promises. It felt more like a dress rehearsal for a future that’s already knocking at our door. Artificial intelligence, once confined to chat windows and dashboard analytics, has broken free. It’s spilling into factories, cars, robots, and devices that fit in your pocket.

The familiar players were all there, of course. NVIDIA, AMD, Amazon, and Samsung commanded their usual attention. But the story they were telling had changed. The focus wasn’t just on bigger models or faster cloud services. It was on convergence, on marrying those powerful AI systems with the messy, physical constraints of real hardware. The goal? Building experiences that work predictably in the unpredictable real world.

The Hardware Foundation: Silicon Gets Serious

One clear track at CES 2026 was all about raw compute power. Companies showed off the next generation of silicon and system platforms designed to make real-time perception and control not just possible, but practical. NVIDIA and AMD drove headlines with their latest chip announcements and OEM partnerships. Meanwhile, PC makers and gaming brands teased machines that fold, roll, and expand, giving you more screen real estate when you need it most.

Why does this matter for the average user or developer? Running a model locally removes the round trip to a distant server, and more efficient chips cut the energy each AI task consumes. The result is lower latency and longer battery life. Inference, the process of running a trained model to get an output, is moving from the data center into the device in your hand or on your desk. That shift toward edge intelligence was on full display.

AI in Action: From Meeting Rooms to Backyard Birds

The other track was less about the silicon itself and more about what you can actually do with it. Vendors demonstrated how AI can quietly augment our daily routines. Take Plaud, for instance. The small AI hardware company launched the NotePin S, an AI pin designed for meetings, alongside a desktop notetaker app. The system transcribes and summarizes calls without needing a virtual bot to join the conversation.

Think of an AI pin as a smart wearable badge that listens for context and offers help on demand. Plaud’s approach shows how lightweight hardware and clever software can team up, turning raw speech into concise notes and actionable tasks. For distributed teams where keeping track of decisions is crucial, tools like this could be transformative. You can read more about Plaud’s new offerings in TechCrunch’s coverage.

The impulse to embed intelligence showed up in more surprising places, too. Birdbuddy 2 can identify birds by their song alone, a perfect example of a small, purpose-built device using on-device audio models to deliver a simple, delightful experience. Sony used its stage to highlight Afeela, its electric vehicle project with Honda, reminding everyone that modern cars are becoming platforms for continuous AI updates. And Samsung’s Galaxy Z TriFold made it clear that mobile hardware innovation is far from dead; it’s just being repurposed for immersive, AI-augmented multitasking.

Gaming, AR, and the Portable Big Screen

Gaming hardware at CES pointed toward a future of portable, big-screen ambition. We saw new handheld chips tuned for sustained performance, AR glasses that promise to turn a park bench into a giant virtual monitor, and gaming laptops with rollable, expanding displays that could redefine immersion.

Augmented reality, which overlays digital content onto your view of the physical world, is reaching a new level of practicality. When you pair it with local compute power and low-latency input, it stops being a gimmick and starts feeling like a believable, useful extension of your workspace or play space. The progress in AR and spatial computing was impossible to miss.

The Cloud Players Stitch the Fabric

While the spotlight was on devices, the big cloud companies were busy working in the background, stitching everything together. Amazon brought Alexa to the open web with Alexa.com, making its assistant more platform-agnostic. Google previewed new Gemini features for TVs, signaling that the living room remains a central battleground for how we’ll interact with multimodal AI.

These moves point toward the hybrid future that many tech analysts have been predicting. Cloud services will handle the heavy lifting, such as model training and large-scale updates, while local agents on devices manage immediacy, context, and privacy. It’s not an either-or proposition anymore.

Weird, Wonderful, and Instructive Hardware

CES has always been a laboratory for product thinking, and 2026 was no exception. From robotic oddities to the Clicks Communicator, a device that garnered attention for its focus on tactile, hands-on interaction, the show proved that experimentation is still the fastest path to useful refinement.

Companies are throwing countless form factors and user interfaces at the wall to see what actually sticks and improves daily life. Not every weird gadget will survive, but these experiments accelerate collective learning about what users truly want and need. As Engadget’s live updates noted, the diversity of concepts on display was staggering.

What This Means for Builders and Developers

For developers and product teams watching from the sidelines, the implications are immediate and tangible. There’s going to be intense pressure to optimize AI models for constrained environments, to tune them for both latency and energy consumption, and to rethink user flows so that AI feels helpful rather than distracting.

Tools that compile and compress models, along with frameworks that let you seamlessly push code across cloud and edge deployments, will be in high demand. Integration challenges will multiply as devices with wildly different capabilities all need to deliver consistent, explainable behavior. And let’s not forget the standards. Questions around privacy, data ownership, and model accountability will become even more critical as AI inference moves into sensitive spaces like cars, meeting rooms, and our homes.
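Model compression covers several techniques. A common one is post-training quantization, which stores weights at lower numeric precision so they take less memory and can use integer arithmetic. What follows is a minimal sketch, assuming symmetric per-tensor int8 quantization written in plain Python; the function names `quantize_int8` and `dequantize_int8` are hypothetical, and production toolchains do considerably more (per-channel scales, calibration data, operator fusion).

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (illustrative sketch).

    Maps each float to an integer in [-127, 127] using one shared scale,
    chosen so the largest-magnitude weight lands on +/-127.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid dividing by zero for an all-zero (or empty) tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round, then clamp, so floating-point noise can't push a value past 127.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Approximately reconstruct the original floats from int8 values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0, 0.9]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, f"scale={scale:.6f}", f"max error={max_err:.6f}")
```

Storing each weight in one byte instead of four (float32) cuts weight memory by roughly 4x. The price is a rounding error of at most half a quantization step (`scale / 2`) per weight, which is why real toolchains check accuracy after compressing.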

This aligns with the broader shift in developer priorities we’ve been tracking, where the focus is moving from pure capability to responsible, efficient deployment.

Navigating the Tradeoffs

This distributed AI future isn’t without its complications. Moving compute to the device can protect user privacy and eliminate network lag, but it also fragments capabilities. Not every smartwatch or earbud can host the same massive model that runs in a data center. Cloud services, on the other hand, offer incredible scale and the ability to push frequent improvements, but that centralization raises legitimate questions about dependence, control, and single points of failure.

The most compelling products of the next few years won’t choose one side. They’ll be the ones that masterfully combine both approaches, using the cloud for heavy training and large-scale model updates while relying on device-side inference for contextual, immediate utility. Getting this balance right is the new frontier for product design.
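One way to picture that balance is a per-request routing policy. The sketch assumes a hypothetical device profile and request type (`DeviceProfile`, `InferenceRequest`, and `route` are illustrative names, not any vendor’s API) and encodes one possible set of rules: privacy-sensitive inputs stay on the device, the device also takes over when offline or when the network round trip alone would exceed the latency budget, and everything else goes to the larger cloud model.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass
class DeviceProfile:
    # Hypothetical fields describing the device's current situation.
    has_local_model: bool   # an on-device model exists for this task
    online: bool            # a network connection is available
    network_rtt_ms: float   # measured round-trip time to the cloud endpoint


@dataclass
class InferenceRequest:
    latency_budget_ms: float  # how long the experience can wait for a result
    privacy_sensitive: bool   # e.g. raw meeting audio or in-cabin camera frames


def route(req: InferenceRequest, dev: DeviceProfile) -> Target:
    """Choose where one request runs under an illustrative hybrid policy."""
    # Policy choice: privacy-sensitive inputs never leave the device,
    # and an offline device has no cloud to fall back on either.
    if req.privacy_sensitive or not dev.online:
        if dev.has_local_model:
            return Target.DEVICE
        raise RuntimeError("no on-device model and the cloud is not an option")
    # If the network round trip alone exceeds the budget, stay local.
    if dev.has_local_model and dev.network_rtt_ms >= req.latency_budget_ms:
        return Target.DEVICE
    # Otherwise, prefer the larger cloud model.
    return Target.CLOUD
```

With an 80 ms round trip, a request that must answer within 50 ms stays on the device, while one with a 500 ms budget goes to the cloud. A production policy would likely weigh more signals, such as battery level, thermal state, and per-request cost; the point of the sketch is that the device-or-cloud decision can be made per request rather than fixed at design time.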

The Checkpoint, Not the Destination

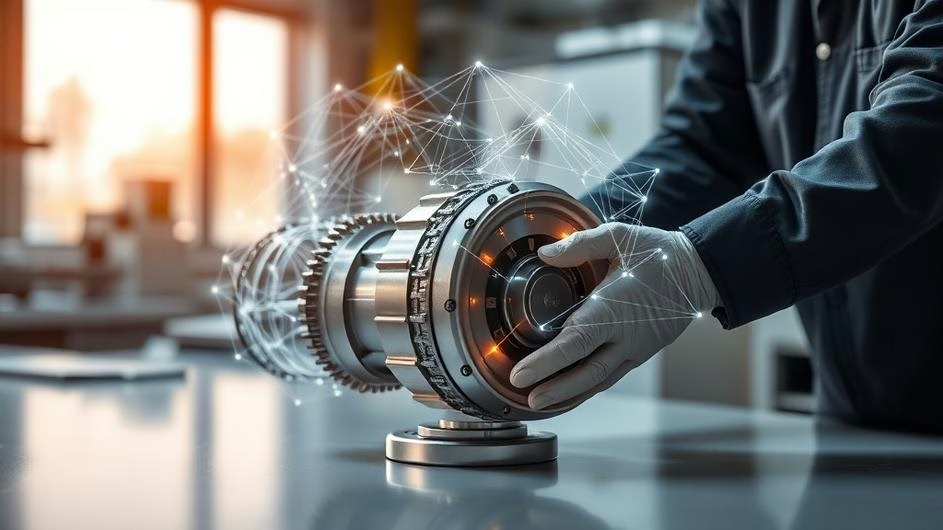

CES 2026 didn’t feel like a final destination. It felt like a crucial checkpoint. The era of AI as a purely cloud-based novelty is clearly giving way to something more substantial: intelligence as a distributed substrate, embedded in the objects around us, shaped by physical limits, and designed to interact with the real world.

That means better, less frustrating experiences in virtual meetings. It means more capable and adaptable robots on factory floors. It means vehicles that can understand and react to their surroundings in real time. And it means a new wave of consumer gadgets that manage to be both genuinely playful and seriously practical.

Looking ahead, the next wave of innovation will be about polishing the seams. Expect more attention on developer ergonomics, better tooling for cross-tier deployment, and design patterns that prioritize user trust and interface clarity. The most interesting products will come from teams that stop thinking of hardware and software as separate entities. They’ll treat hardware as a fundamental feature and software as the amplifier that brings it to life.

CES 2026 gave us a clear, detailed sketch of that future. The rest of this year will be about turning those prototypes and promises into products that feel not just innovative, but inevitable. For a deeper dive into the trends that emerged, TechCrunch’s recap is essential reading.

What’s your take? Is moving AI to the edge the right path, or does it create more problems than it solves? For developers, is the added complexity of hybrid models worth the payoff in user experience? The conversation is just getting started, and as the physical AI revolution continues to unfold, we’ll be here to track every development.

Sources

- TechCrunch, “CES 2026: Follow live as NVIDIA, Lego, AMD, Amazon, and more make their big reveals,” January 5, 2026

- TechCrunch, “Plaud has a new AI pin and a software notetaker,” January 5, 2026

- Engadget, “CES 2026: Live updates on Lego, Samsung, NVIDIA, Dell and more,” January 5, 2026

- TechCrunch, “Recapping everything we’ve seen so far at CES 2026,” January 9, 2026

- AOL.com, “Tech Trends That Will Define 2026 – IGN x CNET Group CES Special Report,” January 11, 2026