CES 2026: When AI Steps Off the Screen and Into the World

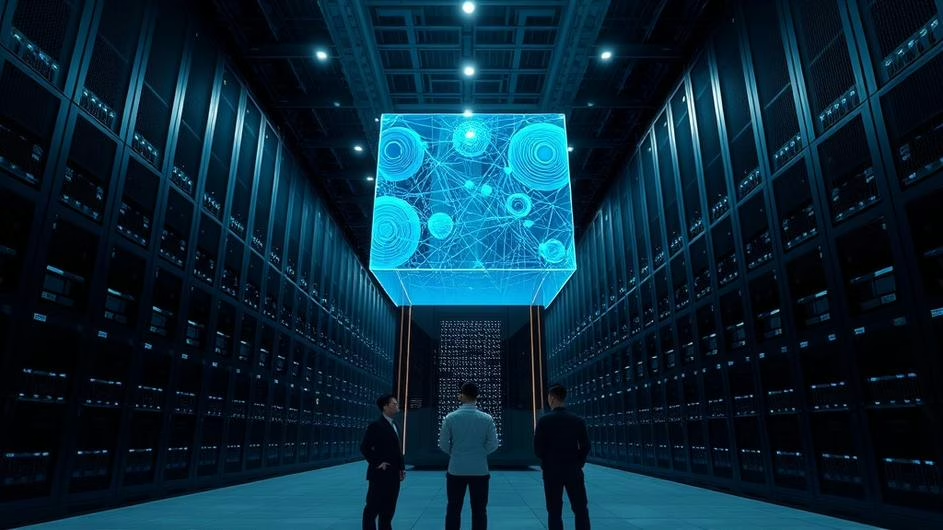

Walking the show floor in Las Vegas this year, you couldn’t help but feel something had shifted. This wasn’t just another tech showcase with flashy prototypes and marketing hype. CES 2026 felt different, more like a dress rehearsal for a world where artificial intelligence doesn’t just live in the cloud or on your phone screen, but in the physical objects around you. The usual spectacle was there, sure, but beneath the surface a clearer story was emerging, one that connected announcements from chip giants, TV manufacturers, toy makers, and robotics startups.

The real narrative wasn’t about more computing power or brighter displays. It was about convergence: how AI is beginning to orchestrate hardware, software, and everyday items into systems that adapt and respond in real time. If you’ve been following the evolution of consumer AI, this shift shouldn’t come as a complete surprise, but seeing it play out across so many product categories made the trend impossible to ignore.

The Robots Are Here, and They’re Actually Useful

Robots were everywhere at CES 2026, in forms ranging from the practical to the downright surreal. On the practical side, Narwal made waves with its Flow 2 robot vacuum and mop, which the company calls its most advanced cleaning robot yet. Its presence underlined a quiet shift that has been building: robot vacuums are graduating from novelty gadgets to legitimate home appliances that can vacuum, mop, and map your living space with genuine autonomy.

Similarly, products like the SwitchBot Onero H1 caught attention because they represent machines that might actually find a real foothold in homes. They combine useful automation with sensible pricing and integration, something that’s been a stumbling block for many smart home devices. It’s part of a broader wave of consumer AI tools that are moving beyond gimmicks to deliver tangible value.

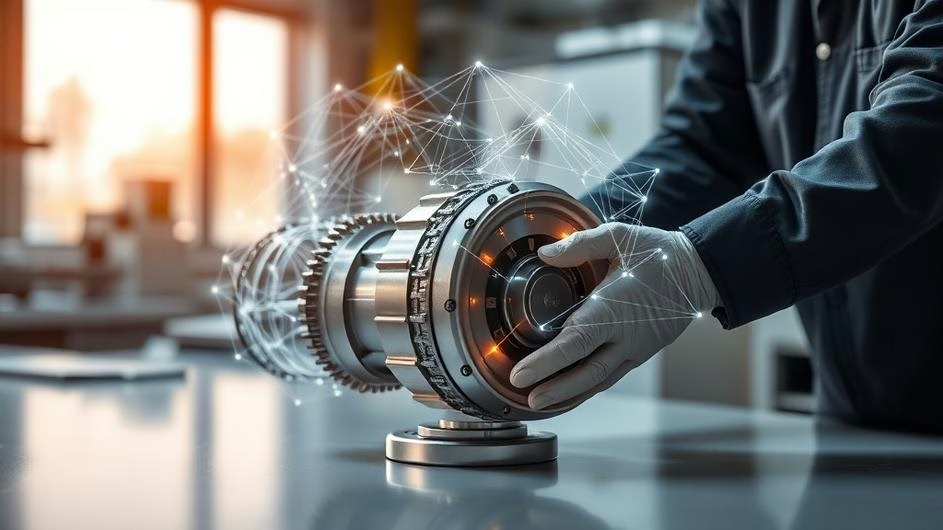

The robotics section had its share of theater too, from humanoid bots demonstrating laundry folding to devices designed for hotel service and retail. Those demos reveal two important trends. First, physical robots are benefiting from better sensing and on-device inference, which cuts down latency and allows for more natural interactions. Second, companies are increasingly designing robots for specific tasks rather than chasing one-size-fits-all generality. That focus on utility makes sense when true general intelligence remains out of reach.

Smart Home Gets Smarter, and Stranger

The smart home category expanded in some genuinely surprising directions this year. Lego took the stage with its new Smart Brick, signaling that toys won’t just teach kids to build physical structures, but will also serve as accessible hardware platforms for learning about sensors and programmable behaviors. It’s a clever move that bridges education and entertainment while introducing the next generation to basic programming concepts.

Meanwhile, oddities like bone conduction lollipops and AI-powered hair clippers surfaced on the periphery, reminding everyone that CES remains a playground for imagination. Sometimes those playful experiments point toward genuinely useful innovations, even if they look strange at first glance. As hardware continues to evolve, we’re seeing more experimentation with form factors and interfaces that challenge our assumptions about what technology should look like.

Your TV Wants to Be Your Assistant

Television makers continued to push the boundaries of what a TV can be, treating the screen less as a passive display and more as an ambient computing surface. LG’s Wallpaper TV captured attention for its design that minimizes visual bulk, while TCL and others previewed features like Max Ink Mode for low-power, paper-like displays that could change how we think about screen time.

Google doubled down on the idea that TVs should be smart assistants, with Gemini on Google TV getting an update aimed at richer visuals and voice-controlled settings. The arrival of Nano Banana, Google’s image generation model, and expanded voice control on select TCL sets show how AI on TV is shifting from passive search and casting to proactive, contextual interactions. For developers, that means building interfaces that anticipate user intent while respecting the living room context, where interruptions and privacy concerns are always top of mind.

Gaming and High-Performance Computing Find Their Moment

Gaming hardware and high-end computing also had their moments in the spotlight. ASUS showcased entries like the ROG Zephyrus Duo, underlining that high-performance, AI-augmented workflows matter not just for gamers but for creators who rely on powerful local compute for real-time rendering and media production. It’s part of a broader trend we’ve been tracking in our coverage of CES 2026 and manufacturing moves that are reshaping consumer AI.

At the platform level, companies like Nvidia and AMD are pushing silicon specifically designed to accelerate AI workloads. This hardware foundation supports everything from autonomous vehicle sensors to edge inference in consumer devices, creating a virtuous cycle where better chips enable more sophisticated AI applications, which in turn drive demand for even better chips.

The Weird and Wonderful Side of CES

If CES 2026 had a wildcard category, it was the collection of strange and wonderful devices that make you smile first, then think. ZDNET’s roundup of the seven weirdest gadgets included everything from bite-sized music players that use bone conduction to humanoid laundry-folders. These products matter because they expose us to unfamiliar interfaces and interaction paradigms. Sometimes a playful experiment reveals a customer need or interaction method that becomes mainstream years later.

Remember when voice assistants seemed like science fiction? Or when touchscreens were novelty items? Many of today’s essential technologies started as someone’s “weird” idea at a trade show. That’s why paying attention to these outliers matters, even if they don’t seem immediately practical.

What This Means for Developers and Tech Leaders

So what does all this add up to for the people actually building this technology? For starters, the integration layer is becoming the real battleground. It’s no longer enough to build a best-in-class model or a great widget. Success will increasingly depend on how well your software coordinates sensors, local inference, cloud services, and user interfaces. That raises tough questions about latency, privacy, and over-the-air updates, while creating new opportunities for modular, secure middleware solutions.

Second, hardware design is getting smarter by default. TVs, vacuums, toys, and robots are shipping with more capable edge AI, which reduces dependence on faraway servers and makes experiences more responsive. Writing code for this world requires paying attention to constraints like power consumption, intermittent connectivity, and the need for graceful degradation when models fail or connections drop.
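To make “graceful degradation” concrete, here is a minimal sketch of a tiered fallback: try on-device inference first, fall back to a cloud call if one is available, and settle on a safe default when both fail. Everything in it is an illustrative assumption rather than any vendor’s API: the `infer_with_fallback` function, the idea of a model as a plain callable, the `"idle"` default, and the two failing stand-ins are all hypothetical.

```python
from typing import Callable, Optional, Tuple

# A "model" here is any callable mapping a sensor reading to a label (an assumption).
Model = Callable[[float], str]


def infer_with_fallback(
    reading: float,
    local_model: Model,
    cloud_model: Optional[Model] = None,
    safe_default: str = "idle",
) -> Tuple[str, str]:
    """Return (label, source), preferring on-device inference."""
    try:
        return local_model(reading), "local"
    except RuntimeError:
        pass  # on-device model failed; fall through to the next tier

    if cloud_model is not None:
        try:
            return cloud_model(reading), "cloud"
        except (ConnectionError, TimeoutError):
            pass  # connection dropped; fall through to the safe default

    return safe_default, "default"


# Hypothetical stand-ins for a crashed local model and an offline cloud service.
def broken_local(reading: float) -> str:
    raise RuntimeError("model failed to load")


def offline_cloud(reading: float) -> str:
    raise ConnectionError("no network")


label, source = infer_with_fallback(0.7, broken_local, offline_cloud)
print(label, source)  # prints "idle default"
```

Returning the source alongside the label is a deliberate choice in this sketch: the rest of the device can tell a real prediction from a fallback and adjust its behavior, for example by pausing a task instead of acting on a default.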

Finally, the convergence of AI and the physical world demands a renewed focus on standards and safety. Autonomous systems that interact with people and property need predictable behavior, transparent failure modes, and clear mechanisms for human override. These aren’t just engineering challenges. They’re policy and product decisions that will fundamentally shape user trust in these technologies.
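One simple way to picture a human override mechanism is a task runner that checks a stop flag before every step, so a person can halt the machine at a predictable point. The sketch below is a toy illustration under stated assumptions, not how any robot shown at CES works: the `OverridableTask` class, its method names, and the laundry-folding step names are all hypothetical.

```python
import threading
from typing import Callable, List, Tuple


class OverridableTask:
    """Runs named steps in order, checking a human stop flag before each one."""

    def __init__(self) -> None:
        self._steps: List[Tuple[str, Callable[[], None]]] = []
        self._stop = threading.Event()  # thread-safe flag a UI or button could set
        self.completed: List[str] = []

    def add_step(self, name: str, action: Callable[[], None]) -> None:
        self._steps.append((name, action))

    def request_stop(self) -> None:
        """Called by a person (button, app, voice) to halt the task."""
        self._stop.set()

    def run(self) -> str:
        for name, action in self._steps:
            if self._stop.is_set():
                # Transparent failure mode: report why the task ended early.
                return "stopped_by_human"
            action()
            self.completed.append(name)
        return "finished"


task = OverridableTask()
task.add_step("pick_up_shirt", lambda: None)
task.add_step("fold_shirt", task.request_stop)  # simulate a person pressing stop mid-task
task.add_step("place_shirt", lambda: None)

result = task.run()
print(result, task.completed)
```

In this toy version the override only takes effect between steps, which keeps behavior predictable but means long-running steps would need their own checks. A real product would also need a hardware-level stop that does not depend on software at all.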

If you’re wondering how this plays out for different stakeholders, consider this breakdown:

| Stakeholder | Key Implications | Opportunities |
|---|---|---|
| Developers | More work at system boundaries, need for edge optimization | New middleware tools, specialized AI models for devices |
| Users | More responsive devices, reduced cloud dependence | Better privacy, lower latency, more reliable performance |
| Investors | Shift from pure software to hardware-software integration | Edge computing infrastructure, specialized AI chips |
| Policymakers | New safety and privacy concerns with physical AI | Framework development for autonomous system regulation |

The Road Ahead: Integration Is Everything

CES 2026 didn’t deliver any single breakthrough that will remake the industry overnight. Instead, it offered a clearer view of how many incremental advances, across silicon, software, and human-centered design, are assembling into a world where AI is less of an app feature and more of a connective tissue between devices and services. For developers, that means more work at system boundaries, more opportunities to craft compelling experiences, and a responsibility to build with safety and privacy in mind from the start.

Looking ahead, expect the coming year to be all about integration. We’ll see more devices that behave like services, and more services that need to understand the physical context in which they operate. The winners will be teams that treat AI not as bolt-on magic, but as infrastructure for interaction, reliability, and user empowerment. As AI leaves the cloud and starts living in our everyday objects, the tradeoffs we’ll need to solve to make this future both useful and humane are coming into sharper focus.

The question isn’t whether AI will continue moving into physical devices. It’s how quickly and how well. Based on what we saw at CES 2026, that transition is already well underway, and it’s going to reshape everything from how we clean our homes to how we interact with entertainment. The companies that understand this shift, and build products that respect both the technical constraints and human needs, will be the ones defining the next chapter of consumer technology.

Sources

TechCrunch, “CES 2026: Follow live as NVIDIA, Lego, AMD, Amazon, and more make their big reveals,” Mon, 05 Jan 2026

ZDNET, “The 7 weirdest tech gadgets I’ve seen at CES 2026 – so far,” Wed, 07 Jan 2026

Engadget, “CES 2026: Live updates on the latest gadgets at the biggest tech event of the year,” Mon, 05 Jan 2026

Mashable, “Narwal Flow 2 robovac announced at CES 2026: Specs, features, price, more,” Sun, 04 Jan 2026

The Verge, “Gemini on Google TV is getting Nano Banana and voice-controlled settings,” Mon, 05 Jan 2026