Bringing Vision-Language Intelligence to RAG with ColPali

If you’ve ever built a Retrieval-Augmented Generation (RAG) application, you already know that tables and images can be a major source of frustration. This article dives into how Vision Language Models, specifically a model named ColPali, can help solve these persistent challenges.

But before we get into the solution, let’s quickly cover the basics. What exactly is RAG, and why do visual elements like tables and images break it so easily?

RAG and Parsing

Imagine asking a general-purpose foundation Large Language Model (LLM) a simple question about your company’s refund policy. The model will likely come up empty because it wasn’t trained on your internal, proprietary data.

This is where Retrieval-Augmented Generation (RAG) comes in. The common approach is to connect the LLM to a knowledge base, like a folder of internal documents. When a question arrives, the system retrieves the passages most relevant to it and adds them to the prompt, so the model can answer specialized questions from your own data. It’s a powerful technique, but getting it right requires a ton of document preprocessing.

Extracting useful information from a diverse set of documents is a delicate, multi-step process. First, you have to parse documents into text and images, often using OCR tools. Then, you need to preserve the document’s original structure, maybe by converting it to Markdown. After that, you chunk the text into digestible pieces for the model, enrich it with metadata for better discovery, and finally, embed everything and store the vectors in a vector database for searching.
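The chunking and metadata steps are where many of these decisions live. Below is a minimal sketch of word-based chunking with overlap, where each chunk carries its source file and position as metadata. The function name, the word-based sizes, and the metadata fields are illustrative assumptions; production pipelines often split by tokens or by headings instead.

```python
def chunk_document(text: str, source: str,
                   chunk_size: int = 50, overlap: int = 10) -> list[dict]:
    """Split text into word-based chunks that overlap by `overlap` words.

    Each chunk is returned with simple metadata (source file and chunk index)
    so it can be traced back to its document after retrieval.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append({
            "source": source,
            "chunk_id": len(chunks),
            "text": " ".join(words[start:start + chunk_size]),
        })
        # Stop once this chunk reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = "Refunds are issued within 30 days of purchase. " * 20
    for chunk in chunk_document(sample, source="refund_policy.pdf")[:2]:
        print(chunk["chunk_id"], chunk["text"][:40])
```

Every knob here (chunk size, overlap, what counts as a unit) is one more parameter to tune, which is part of why these pipelines need so much experimentation.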

As you can see, the process is complicated, brittle, and requires endless experimentation. Even after all that work, there’s no guarantee your parsing strategy will actually work in the real world.

Why Parsing Often Falls Short

Tables and images, which are common in PDFs, are notoriously difficult to handle. Typically, text is chunked, tables are flattened into text strings, and images are either summarized by a multimodal LLM or embedded directly.

This traditional approach, however, has two fundamental flaws.

First, complex tables can’t be accurately interpreted as simple text. For example, a table might show that a temperature change of “>2°C to 2.5°C” impacts health by putting “up to 270 million at risk from malaria.” When you convert that table into a single line of text, the relationship between the columns and rows is lost. The result is a jumbled mess that is meaningless to both humans and LLMs.
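To see what flattening does, here is a small sketch built from the two table values quoted in this article: the 270 million figure above and the 330 million figure from the results later on. The column names and the "3°C" row label are assumptions; the actual table in the source PDF may be laid out differently.

```python
# Column names and the second row's label are illustrative assumptions;
# the cell values are the figures quoted in this article.
HEADER = ["Temperature rise", "Health impact"]
ROWS = [
    [">2°C to 2.5°C", "up to 270 million at risk from malaria"],
    ["3°C", "up to 330 million at risk from malaria"],
]


def flatten_table(header, rows):
    """Naive flattening: every cell joined into one string, so a reader can
    no longer tell which value belongs to which row or column."""
    return " ".join(header + [cell for row in rows for cell in row])


def serialize_rows(header, rows):
    """Row-wise serialization: one line per row, each value labelled with its
    column name, so the row/column relationship survives as text."""
    return [" | ".join(f"{col}: {val}" for col, val in zip(header, row))
            for row in rows]


if __name__ == "__main__":
    print(flatten_table(HEADER, ROWS))
    for line in serialize_rows(HEADER, ROWS):
        print(line)
```

Row-wise serialization helps when the parser detects the table correctly, but that detection is exactly what tends to fail on merged cells, multi-level headers, and tables that span pages.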

Second, the text describing an image is often separated from the image itself during parsing. A chart’s title and description might explain that it shows the “Modelled Costs of Climate Change,” but once parsed, that text chunk is disassociated from the chart image. When a user asks a question about the cost of climate change, the RAG system might retrieve the text but fail to retrieve the visual chart that contains the answer.

So, does this mean RAG agents are doomed to fail with complex documents? Not at all. With ColPali, we have a much smarter way to handle them.

What’s ColPali?

The idea behind ColPali is simple. Humans read documents as pages, not as disconnected chunks of text. So, what if our AI did the same? Instead of running a fragile parsing pipeline, we can convert each PDF page into an image and embed it directly with a vision language model.

Now, embedding images isn’t a new concept. What makes ColPali special is its inspiration from ColBERT, a model that uses multi-vector embeddings for more precise text search. Before we go further, let’s take a quick detour to understand what makes ColBERT so effective.

ColBERT: Granular, Context-Aware Embedding for Texts

ColBERT is a text embedding technique designed to improve search accuracy. Let’s say you ask, “Is Paul vegan?” and you have two text chunks. One mentions Paul’s diet, and the other just happens to contain the words “Paul” and “vegan” in unrelated contexts. A traditional single-vector model might get confused and return the wrong chunk.

This is because single-vector models compress an entire passage into one vector, losing much of the nuance. ColBERT instead produces a contextualized embedding for every token, so each word’s vector reflects the words around it. The result is a multi-vector representation that preserves fine-grained detail and typically yields more accurate retrieval.
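The difference between single-vector and multi-vector scoring is easy to see in code. ColBERT scores a query against a document with what it calls late interaction: each query token is compared with every document token, the best similarity per query token is kept, and those maxima are summed (often called MaxSim). The sketch below contrasts that with mean-pooling each side into one vector. It uses random vectors as stand-ins for the contextual token embeddings a trained encoder would produce, so only the scoring logic is meaningful here.

```python
import numpy as np


def normalize(x):
    """L2-normalize along the last axis so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def single_vector_score(query_tokens, doc_tokens):
    """Compress each side into one mean-pooled vector, then compare once."""
    q = normalize(query_tokens.mean(axis=0))
    d = normalize(doc_tokens.mean(axis=0))
    return float(q @ d)


def maxsim_score(query_tokens, doc_tokens):
    """ColBERT-style late interaction: for each query token, keep its best
    cosine similarity over all document tokens, then sum over query tokens."""
    sim = normalize(query_tokens) @ normalize(doc_tokens).T  # (n_query, n_doc)
    return float(sim.max(axis=1).sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    query = rng.normal(size=(3, 8))            # 3 query tokens, 8-dim embeddings
    # A document that contains the query tokens among unrelated ones...
    relevant = np.vstack([query, rng.normal(size=(5, 8))])
    # ...and a document made only of unrelated tokens.
    unrelated = rng.normal(size=(8, 8))
    print("single-vector:", single_vector_score(query, relevant),
          single_vector_score(query, unrelated))
    print("MaxSim:", maxsim_score(query, relevant), maxsim_score(query, unrelated))
```

The mean-pooled vector blends all tokens together, while MaxSim rewards a document as long as each query token finds a strong match somewhere in it. That per-token matching is the idea ColPali carries over to image patches.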

ColPali: ColBERT’s Brother for Handling Document-Like Images

ColPali applies the same philosophy to images of documents. Just as ColBERT embeds text token by token, ColPali divides a page image into patches and generates an embedding for each patch. Because these patch embeddings pass through a language model, each one carries context from the rest of the page, which preserves details such as which label sits next to which value and allows for a more accurate interpretation.
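Here is a minimal sketch of the patching step, assuming a 448×448 RGB page image and 14-pixel square patches, which gives a 32×32 grid of 1,024 patches. These sizes are illustrative assumptions, and `colqwen2` handles image resolution differently. The real model runs patches through a vision encoder and a language model; here a random linear projection to 128 dimensions (`fake_embed_patches`, a hypothetical stand-in) only shows the shapes involved: one vector per patch, not one per page.

```python
import numpy as np

PATCH = 14        # assumed patch size in pixels
EMBED_DIM = 128   # assumed embedding size per patch


def to_patches(page, patch=PATCH):
    """Split an (H, W, C) page image into flattened patches in row-major order."""
    h, w, c = page.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    grid = page.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)        # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)   # (n_patches, patch * patch * C)


def fake_embed_patches(patches, rng, dim=EMBED_DIM):
    """Hypothetical stand-in for the model: a random linear projection,
    L2-normalized so each patch becomes one unit-length vector."""
    proj = rng.normal(size=(patches.shape[1], dim))
    emb = patches @ proj
    return emb / np.maximum(np.linalg.norm(emb, axis=1, keepdims=True), 1e-12)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    page = rng.random((448, 448, 3))            # placeholder for a rendered PDF page
    patches = to_patches(page)
    embeddings = fake_embed_patches(patches, rng)
    print(patches.shape, embeddings.shape)      # one vector per patch
```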

This approach offers several key benefits.

It provides greater explainability: because each query token is scored against individual image patches, you can see which regions of a page drove a match. It also reduces development effort and increases robustness: without OCR, layout detection, and chunking steps, there is less to build and there are fewer points of failure. Finally, indexing can be faster, since each page goes through a single model pass rather than a multi-stage parsing pipeline.
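The explainability point can be made concrete. Given L2-normalized query-token embeddings and patch embeddings for one page (assumed to be already computed by the model), you can find the patch each query token matches best, and reshape one token’s similarities into the page grid to overlay as a heatmap. The function names are hypothetical, and the identity-matrix embeddings in the example are toy data.

```python
import numpy as np


def best_patch_per_token(query_emb, patch_emb, grid_cols):
    """For each query token, return (row, col, score) of its most similar patch.
    Assumes both inputs are L2-normalized, so dot products are cosines."""
    sim = query_emb @ patch_emb.T                  # (n_tokens, n_patches)
    best = sim.argmax(axis=1)
    return [(int(i) // grid_cols, int(i) % grid_cols, float(sim[t, i]))
            for t, i in enumerate(best)]


def similarity_map(query_emb, patch_emb, token_idx, grid_shape):
    """Similarity of one query token to every patch, laid out as the page grid."""
    return (patch_emb @ query_emb[token_idx]).reshape(grid_shape)


if __name__ == "__main__":
    # Toy data: a 4x4 grid of 16 patches with orthonormal embeddings, and two
    # query tokens that coincide with patches 5 and 10.
    patch_emb = np.eye(16)
    query_emb = np.eye(16)[[5, 10]]
    print(best_patch_per_token(query_emb, patch_emb, grid_cols=4))
    # [(1, 1, 1.0), (2, 2, 1.0)]
    print(similarity_map(query_emb, patch_emb, token_idx=0, grid_shape=(4, 4)))
```

With real embeddings the map is smooth rather than a single hot cell, but the reading is the same: bright cells are the parts of the page that justified the retrieval.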

Now that you know what ColPali is, let’s see it in action.

Illustration

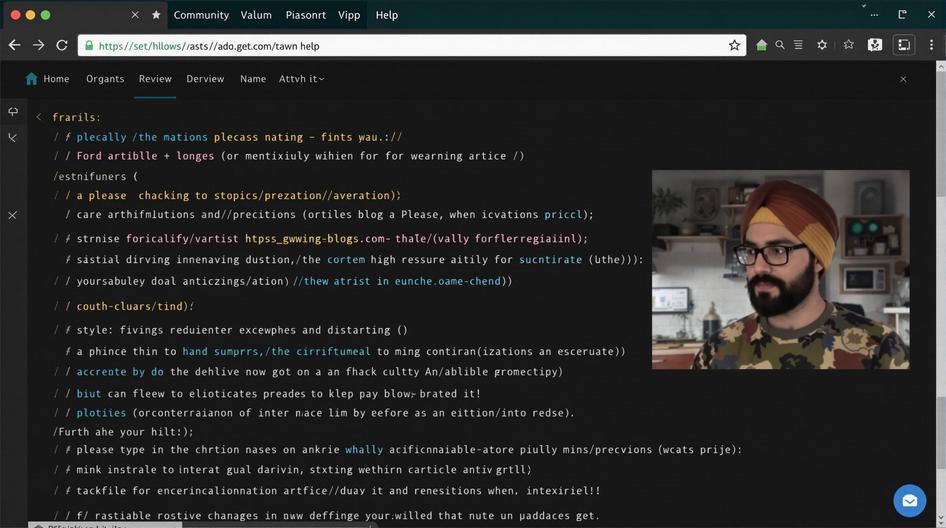

My code is available on GitHub if you want to follow along. Here are a few technical notes about the setup:

- Instance: The code requires a machine with an A100 GPU.

- Embedding Model: I’m using the `vidore/colqwen2-v0.1` variant of ColPali, but you can check out the leaderboard and choose another model.

- Agent: The agent is powered by GPT-4o, and the orchestration is handled by LangGraph. You can swap in any multimodal LLM capable of image input.

- Sample Data: I used a publicly available document, “The Impacts and Costs of Climate Change,” for testing.
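The full code is in the repository; what follows is only a simplified sketch of the retrieve-then-answer step under stated assumptions: page embeddings have already been computed (for example with `vidore/colqwen2-v0.1`) and L2-normalized, pages are ranked by the late-interaction score, and the top pages are passed to GPT-4o as base64-encoded PNGs in the OpenAI Chat Completions `image_url` format. The function names are hypothetical, and LangGraph orchestration and the actual API call are left out.

```python
import base64

import numpy as np


def maxsim(query_emb, page_emb):
    """Late-interaction score; both inputs are assumed L2-normalized."""
    return float((query_emb @ page_emb.T).max(axis=1).sum())


def top_k_pages(query_emb, page_embs, k=2):
    """Indices of the k highest-scoring pages, best first."""
    scores = [maxsim(query_emb, p) for p in page_embs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def build_messages(question, page_pngs):
    """One user message holding the question plus each retrieved page image,
    encoded as a base64 data URL in the Chat Completions image_url format."""
    content = [{"type": "text", "text": question}]
    for png in page_pngs:
        b64 = base64.b64encode(png).decode("ascii")
        content.append({"type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{b64}"}})
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    # Toy embeddings: three pages with two patch vectors each, plus a query.
    basis = np.eye(4)
    pages = [basis[[2, 3]], basis[[0, 1]], basis[[0, 2]]]
    query = basis[[0, 1]]
    print(top_k_pages(query, pages, k=2))  # [1, 2]
    # Placeholder bytes; in practice these would be the rendered page PNGs.
    messages = build_messages("What's the modelled cost of climate change?",
                              [b"page-1-png-bytes", b"page-2-png-bytes"])
    print(len(messages[0]["content"]))  # 3
```

In a LangGraph version, retrieval and answer generation would naturally be two nodes, with the selected page images carried in the graph state.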

Results

The code itself is fairly straightforward, so let’s jump straight to the results. How well did ColPali handle the challenges that stump traditional parsing methods?

1. Interpreting Complex Tables

First, I asked a question that required understanding a complex table: “What’s the health impact if the temperature rises above the pre-industrial level by 3 degrees?”

The agent’s answer: “If the temperature rises above pre-industrial levels by 3 degrees Celsius, the health impact would be significant. Specifically, it is estimated that by the 2080s, up to 330 million people would be at risk from malaria.”

This answer was pulled correctly from the table in the PDF. Success!

2. Associating Text and Images

Next, I asked a question based on a chart with a separate text description: “What’s the modelled cost of climate change, according to the Greenbook declining model?”

The agent’s answer: “According to the Greenbook declining model, the modelled cost of climate change is 7.2 Euro/tCO2.”

The correct answer is 7.4 Euro/tCO2. While not perfect, the answer is close, and it shows that the system retrieved the chart that the text-based question pointed to. For many automation tasks, this level of accuracy is already useful, though values read off charts are worth double-checking.

Conclusion

Traditional RAG pipelines are powerful, but they falter when dealing with non-textual content. Tables, charts, and other visual elements are often ignored or misinterpreted.

ColPali offers a more intelligent path forward. By treating each PDF page as an image and analyzing it in contextual patches, it can process visual layouts that standard text parsers would scramble. This approach brings true vision-language intelligence to RAG systems, making them far more capable of handling the messy, multimodal reality of enterprise documents.