Anthropic at a Crossroads: Expanding Claude, Defining AI Policy, and Navigating the Unpredictable Future of Artificial Intelligence

The AI race has shifted into overdrive, and Anthropic finds itself juggling more than just code optimization. What started as a focused AI safety company has evolved into a major player that’s reshaping how we think about artificial intelligence development, policy, and the very real tensions between innovation and responsibility.

While tech giants battle for market dominance, Anthropic’s approach feels different. They’re not just building faster models or flashier demos. Instead, they’re asking harder questions about what happens when AI gets really, really good at things we didn’t expect.

Claude Code Goes Mainstream: Challenging GitHub’s Dominance

Anthropic’s decision to bring Claude Code to the web isn’t just another product launch. It’s a direct challenge to the dominance of Microsoft’s GitHub Copilot in AI-powered coding assistance. For developers who’ve been locked into specific workflows or frustrated with existing tools, this move opens up new possibilities.

Product Manager Cat Wu explained that accessibility was the driving factor. “We want to meet developers where they actually work,” she noted, pointing out that not everyone lives in terminal environments or wants to integrate yet another plugin into their existing setup.

The timing couldn’t be better. As developer tools become more AI-driven, the market is hungry for alternatives that don’t force you to restructure your entire workflow. Claude Code’s web accessibility means junior developers, freelancers, and teams with mixed tech stacks can all benefit from advanced AI assistance without the usual friction.

What makes this particularly interesting for the broader tech ecosystem is how it democratizes access to sophisticated coding AI. While enterprise customers might stick with established solutions, this move could accelerate AI adoption among individual developers and smaller teams who’ve been priced out or technically excluded from the current offerings.

Washington’s AI Chess Game: Anthropic Under Fire

The political landscape around AI has gotten messy, and Anthropic is feeling the heat. White House AI czar David Sacks recently questioned whether the company aligns with the Trump administration’s aggressive AI adoption strategy, creating uncertainty around future government contracts and regulatory influence.

CEO Dario Amodei didn’t waste time responding. His detailed pushback against accusations of fear-mongering highlighted a fundamental tension in the industry. On one side, you have voices pushing for rapid deployment and competitive advantage. On the other, you have companies like Anthropic advocating for a measured approach to a potentially transformative technology.

California state Senator Scott Wiener’s defense of Anthropic adds another layer to this political drama. The state versus federal regulatory tug-of-war isn’t just about jurisdictional authority. It reflects deeper disagreements about how fast we should move and who gets to decide what “safe” AI development looks like.

For developers, investors, and policymakers watching this unfold, the implications are significant. Government contracts represent massive revenue opportunities, but they also come with political strings attached. How AI companies navigate policy pressures will likely determine not just their business success, but the entire trajectory of AI regulation in the US.

The Fear Factor: When AI Creators Get Scared

Here’s where things get really interesting. Jack Clark, one of Anthropic’s co-founders, recently described AI as a “mysterious creature” and admitted he’s “deeply afraid” of the technology his own company is building.

Let that sink in for a moment. This isn’t some outside critic or skeptical journalist raising concerns. This is someone who understands the technology at a fundamental level, someone whose livelihood depends on AI advancement, expressing genuine apprehension about where we’re headed.

Clark’s honesty reflects something you don’t often hear in tech marketing materials: even the experts are sometimes surprised by what AI models can do. The unpredictable leaps in situational awareness and emergent behaviors that he mentions aren’t theoretical future concerns. They’re happening now, in labs and data centers around the world.

This internal tension between excitement and anxiety isn’t unique to Anthropic, but their willingness to discuss it openly sets them apart. While competitors focus on capabilities and benchmarks, Anthropic consistently brings up the harder questions about responsible AI development and long-term safety considerations.

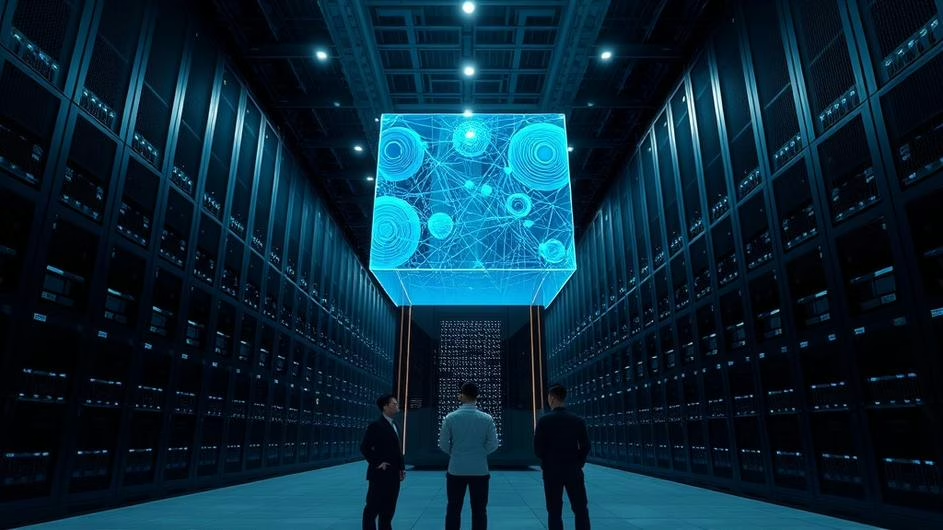

Google Partnership: Scaling Up for the Next Phase

Anthropic’s expanded partnership with Google Cloud represents more than just a procurement deal. Access to up to one million TPU chips is the kind of computational firepower that can substantially accelerate model training and development.

TPUs, Google’s specialized tensor processing units, are optimized for the matrix operations that power large language models. For Anthropic, this infrastructure investment signals serious ambitions for the Claude model family. We’re not talking about incremental improvements here. This level of computing resources enables the kind of breakthrough research that could redefine what AI assistants can accomplish.

The distribution strategy is equally important. By making Claude models available through Google Cloud’s Vertex AI platform and marketplace, Anthropic gains access to enterprise customers who might never have considered their solutions otherwise. This partnership bridges the gap between cutting-edge research and practical business applications.

For the broader cloud computing ecosystem, this collaboration highlights how infrastructure providers are becoming kingmakers in the AI space. The companies with the deepest relationships with cloud giants will have significant advantages in scaling their models and reaching new markets.

What This Means for Tech’s Future

Anthropic’s story illustrates the complex realities facing AI companies in 2025. Technical excellence isn’t enough anymore. Success requires navigating political pressures, maintaining ethical standards, securing massive computational resources, and somehow staying true to your founding principles while competing in an increasingly cutthroat market.

The democratization of AI tools like Claude Code could accelerate software development across industries. When sophisticated AI assistance becomes as accessible as a web browser, we might see a new wave of innovation from unexpected places. Small teams and individual developers suddenly have access to capabilities that were enterprise-only just months ago.

But the political and safety concerns Clark and others have raised can’t be ignored. As AI capabilities expand rapidly, the window for establishing effective governance frameworks is shrinking. The tension between speed and safety that’s playing out in Washington will likely determine not just individual company fortunes, but the entire future of AI development.

For investors, developers, and policymakers, Anthropic’s approach offers a different model for thinking about AI advancement. Rather than pursuing capability at all costs, they’re attempting to balance innovation with responsibility, growth with governance, and ambition with accountability.

Whether this strategy proves sustainable in an increasingly competitive market remains to be seen. But as AI continues its rapid evolution, the companies that can navigate these complex tradeoffs successfully will likely shape the technology’s impact on society for years to come.

The future is uncertain, but one thing seems clear: the decisions being made in AI labs and policy offices today will echo far beyond the tech industry.

Sources:

- “Anthropic brings Claude Code to the web,” TechCrunch, 20 Oct 2025

- “Anthropic tries to defuse White House backlash,” Axios, 21 Oct 2025

- “Anthropic co-founder admits he’s ‘deeply afraid’ of AI, calls it a ‘mysterious creature’,” Mint, 19 Oct 2025

- “Anthropic CEO claps back after Trump officials accuse firm of AI fear-mongering,” TechCrunch, 21 Oct 2025

- “Anthropic to Expand Use of Google Cloud TPUs and Services,” Google Cloud Press Corner, 23 Oct 2025