2026 Tech Momentum, From AR Glasses to Tiny Chips: How Hardware, Audio, and Startups Are Rewriting the Device Playbook

Here’s what’s becoming clear about 2026: we’re not getting one big hardware revolution, but a whole bunch of smaller ones that are starting to click together. Smartphones are getting subtle upgrades, augmented reality glasses are finally showing up in stores you actually visit, and your earbuds are getting smart enough to feel like part of your brain. The real story isn’t any single gadget, but how all these pieces are beginning to work as a team. Devices are slowly morphing from standalone products into components of a new, networked experience.

Apple’s 2026 Playbook: Polish, Reach, and a Smarter Siri

As usual, Apple looks set to dominate the headlines. Reports suggest the first half of the year will be packed with launches, including iterative iPhone refreshes, a more affordable MacBook, and a significant iOS 26.4 update that reportedly gives Siri a major overhaul. For the developer community watching closely, Apple’s moves signal two clear priorities: polish and reach. System-level updates tend to bring fresh APIs and tighter privacy controls, and those shape how third-party apps interact with device sensors and on-board compute. When a platform giant reworks its core assistant and operating system, everyone else has to revisit their assumptions, from how background audio behaves to how deeply apps can integrate with local intelligence. It’s a nudge toward a more cohesive, device-aware software ecosystem.

The AR Moment Arrives (For Real This Time)

While Apple refines its core lineup, augmented reality hardware is having its long-awaited mainstream moment. 2026 looks like the year AR finally leaves the lab and hits retail shelves. Big players are moving aggressively. Amazon is reportedly developing consumer AR glasses, a move that could blend shopping and inventory data into a user’s field of view. Snap has committed billions to its AR ambitions, and Meta continues to iterate on its Ray-Ban smart glasses despite some rollout hiccups. These investments aren’t just about cool tech. They’re about scale: manufacturing volume, retail distribution, and developer incentives. When social and retail giants start placing hardware in the market at volume, the app ecosystems usually follow.

Not every company is playing the same game, though. The market is fragmenting into clear use cases. Some, like the ROG Xreal R1 glasses pushing 240 Hz for esports, are chasing absolute fidelity and blistering frame rates. Others, like the Warby Parker glasses co-developed with Google, aim for mass-market appeal by embedding AI into fashionable, everyday frames. Then there are the enterprise-first players like Lenovo, prioritizing durability, security, and vendor support over consumer flash. The message is clear: AR is no longer one category, but many, each with its own software expectations and distribution channels.

The Overlooked Hero: Smarter Audio

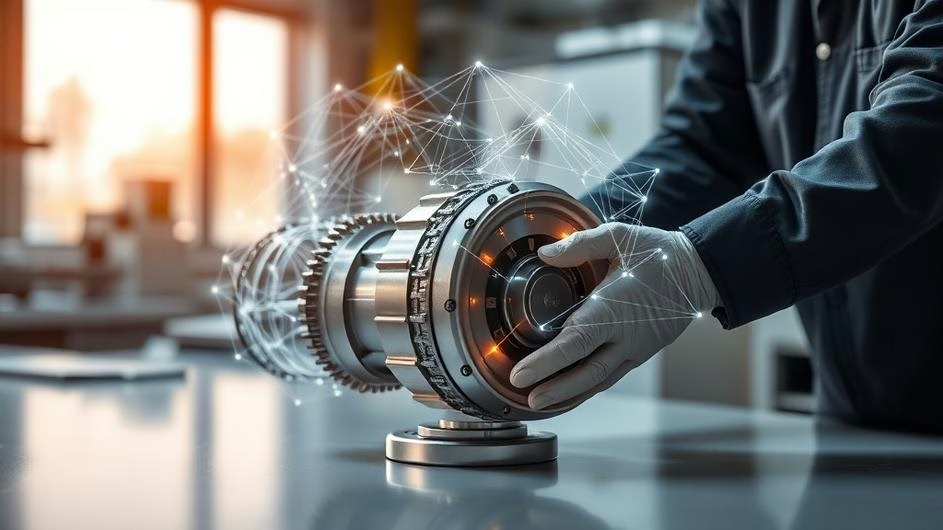

Here’s a piece of the puzzle that often gets missed in the AR conversation: audio. Sony’s recent launch of the WF-1000XM6 earbuds is a reminder that headphones aren’t passive accessories. They’re active participants in the extended reality story. These new buds feature Sony’s QN3e noise-cancelling processor, which the company claims is three times faster than its predecessor. Why does that matter? Active noise cancellation relies on real-time signal processing: the earbud samples ambient sound and plays back an inverted signal to cancel it, so any processing delay leaves part of the noise uncancelled. A faster chip can mean lower latency and more adaptive cancellation. For mixed reality experiences, spatial audio and instant voice interactions are everything. You can’t have a believable AR world if the audio lags or sounds flat. In short, better earbuds don’t just play music better. They make augmented reality itself more convincing.
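To make the latency point concrete, here is a toy model in Python. It is only a sketch under simplifying assumptions: the noise is a single pure tone, the anti-noise is a perfect inverted copy that just arrives late, and the function name `residual_rms` and the delay values are made up for illustration. It says nothing about how Sony's processor actually works.

```python
import math

def residual_rms(freq_hz: float, delay_s: float,
                 sample_rate: int = 48_000, duration_s: float = 0.1) -> float:
    """RMS of what remains after adding delayed, inverted anti-noise to a pure tone."""
    n = int(sample_rate * duration_s)
    total = 0.0
    for i in range(n):
        t = i / sample_rate
        noise = math.sin(2 * math.pi * freq_hz * t)
        # Ideal anti-noise is -noise; a processing delay makes it arrive late.
        anti_noise = -math.sin(2 * math.pi * freq_hz * (t - delay_s))
        total += (noise + anti_noise) ** 2
    return math.sqrt(total / n)

# Hypothetical processing delays, in microseconds, for a 1 kHz tone.
for delay_us in (0, 10, 100):
    print(delay_us, round(residual_rms(1_000, delay_us * 1e-6), 3))
```

With zero delay the tone cancels completely, and the leftover noise grows as the delay grows. Real systems use adaptive filters and deal with broadband sound, but the basic relationship between processing delay and residual noise is the same.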

Where Startups Connect the Dots

All this hardware innovation ripples out into the startup world, and there’s no better place to see that than at events like TechCrunch Disrupt. These gatherings serve a dual purpose: they surface the next big idea, and they connect founders with the capital and partners needed to scale hardware-software combos. For developers and product teams, it’s a live feed of where the market is heading. The pitch battles and demo stages consistently highlight the middleware and platform tools that will let AR glasses talk to cloud services efficiently, or enable low-power earbuds to run sophisticated voice models directly on the device. It’s in these spaces that the software glue for our hardware future gets invented.

What This Means for Builders (and Everyone Else)

So, what’s the practical takeaway for developers crafting products in this environment? The old mindset of building for a single device is fading. Success now favors those who design for continuity across a network of devices. Think about seamless handoffs between your phone, glasses, and earbuds. Think about syncing user state instantly and mitigating latency at every turn.
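One way to picture state syncing is a last-writer-wins merge, where each device keeps a small versioned record per setting and any two devices can reconcile by keeping the newer entry. The Python sketch below is one possible approach, not a recommendation from any vendor; the `Versioned` type, the `merge` function, and the key names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict

@dataclass(frozen=True)
class Versioned:
    value: Any
    counter: int     # logical clock, bumped on every local write
    device_id: str   # tie-breaker when two counters are equal

def merge(a: Dict[str, Versioned], b: Dict[str, Versioned]) -> Dict[str, Versioned]:
    """Combine two device states, keeping the newest entry for each key."""
    merged = dict(a)
    for key, entry in b.items():
        current = merged.get(key)
        if current is None or (entry.counter, entry.device_id) > (current.counter, current.device_id):
            merged[key] = entry
    return merged

phone = {"track_position": Versioned(42, 3, "phone")}
glasses = {
    "track_position": Versioned(40, 2, "glasses"),
    "nav_step": Versioned("turn left", 1, "glasses"),
}
print(merge(phone, glasses))
```

Because the comparison is deterministic, the phone and the glasses end up with the same result no matter which one runs the merge, which is what makes a handoff feel seamless. The trade-off is that concurrent edits to the same key are resolved by discarding one of them.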

You also need to embrace heterogeneity. With multiple form factors and competing vendor priorities, fragmentation is a given. The smart approach is to build modular architectures and lean on standards-friendly solutions, whether that’s web-based XR layers or cross-platform audio APIs.
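A modular design can be as simple as describing each device as a channel with a capability check, then sending output to the first one that is available. The Python sketch below is illustrative only; `Channel`, `deliver`, and the three example devices are hypothetical names, and the availability checks are hard-coded where a real app would query the hardware.

```python
from typing import Callable, NamedTuple, Optional, Sequence

class Channel(NamedTuple):
    name: str
    is_available: Callable[[], bool]
    send: Callable[[str], str]

def deliver(message: str, channels: Sequence[Channel]) -> Optional[str]:
    """Send the message through the first available channel, in preference order."""
    for channel in channels:
        if channel.is_available():
            return channel.send(message)
    return None  # nothing reachable; the caller decides what to do next

# Hard-coded availability stands in for real device discovery.
glasses = Channel("glasses", lambda: False, lambda m: f"overlay: {m}")
earbuds = Channel("earbuds", lambda: True, lambda m: f"speech: {m}")
phone = Channel("phone", lambda: True, lambda m: f"notification: {m}")

print(deliver("Turn left", [glasses, earbuds, phone]))
```

Here the glasses are unavailable, so the message falls back to the earbuds as speech. Adding a new form factor means adding one more `Channel` rather than touching the delivery logic.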

Finally, there’s a clear push toward efficient on-device compute. Specialized chips, like the one in Sony’s new earbuds, demonstrate the tangible advantage of running real-time processing on dedicated hardware when low latency and user privacy are non-negotiable. For developers, this means optimizing for edge intelligence isn’t just a nice-to-have. It’s becoming a core requirement.
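The on-device versus cloud decision often comes down to a latency budget plus a privacy flag. The Python sketch below shows one possible rule, assuming the cloud model is preferred whenever it fits the budget; the function name `choose_target` and every number in the example are hypothetical.

```python
def choose_target(latency_budget_ms: float, network_rtt_ms: float,
                  cloud_infer_ms: float, privacy_sensitive: bool) -> str:
    """Pick where to run a request: "device" or "cloud"."""
    if privacy_sensitive:
        return "device"  # sensitive data never leaves the device
    cloud_total_ms = network_rtt_ms + cloud_infer_ms
    if cloud_total_ms <= latency_budget_ms:
        return "cloud"   # round trip fits the budget
    return "device"      # too slow over the network, stay local

# A voice reply that must land within 50 ms cannot afford an 80 ms round trip.
print(choose_target(50, network_rtt_ms=80, cloud_infer_ms=20, privacy_sensitive=False))
# A background summary with a 300 ms budget can.
print(choose_target(300, network_rtt_ms=80, cloud_infer_ms=20, privacy_sensitive=False))
```

The tighter the budget, the more often the answer is "device", which is why efficient local compute matters so much for earbuds and glasses.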

For consumers, the change won’t feel like a single “wow” moment from one miraculous product. It’ll be a gradual shift toward smoother, more intuitive interactions. You’ll see AR glasses replacing routine phone tasks first in specific niches—think gaming, warehouse logistics, or in-store shopping. Over time, as mass-market designs nail the balance of style, battery life, and cost, our daily behavior might slowly tilt away from constantly pulling a phone from our pocket. Instead, we might rely more on glanceable overlays, quick voice queries, and simple gesture shortcuts.

The Road Ahead: Plumbing Before Polish

We’re not at the convergence point yet. Battery life, app quality, interoperability, and developer tools all remain significant bottlenecks. But the combined force of platform refreshes from giants like Apple, a flood of AR hardware across every price point, smarter audio components, and a vibrant startup scene creates a momentum that’s hard to dismiss.

The best way to think about 2026 is as an inflection year—the period where the underlying plumbing for a composable device future gets laid down. For developers, it’s a massive opportunity to build the shared experiences that will flow across phones, glasses, and wearables. For product leaders, it’s a mandate to prioritize modularity, low-latency compute, and graceful fallbacks. For users, it promises interactions that feel less like managing technology and more like it’s quietly working for you.

The hardware pieces are finally falling into place. The billion-dollar question now isn’t about what gets built, but who can stitch it all together into experiences people genuinely prefer to use. That’s going to be the defining story of the next two years.

Sources

- Tom’s Guide: 11 new Apple products tipped for 2026 — iPhone 17e, cheap MacBook, iOS 26.4 with new Siri and more (Mon, 09 Feb 2026)

- Glass Almanac: 7 AR Hardware And Platform Moves In 2026 That Reveal What’s Changing Now (Sun, 08 Feb 2026)

- Notebookcheck: WF-1000XM6 earbuds officially launch as Sony reveals price and features (Thu, 12 Feb 2026)

- Scene for Dummies: Tech Crunch Disrupt: Breaking Tech News, Startup Pitches & Innovations (Thu, 12 Feb 2026)

- Glass Almanac: 7 AR Glasses Releasing In 2026 That Could Upend Your Phone Habit (Mon, 09 Feb 2026)